6 Trends on ‘Perception’ for ADAS/AV

by Junko Yoshida, EE Times | Automotive Designline

BRUSSELS — The upshot of AutoSens 2019, held here last week, was that there’s no shortage of technology innovation as tech developers, Tier 1s and OEMs continue their hunt for “robust perception” that can work under any road conditions, including night, fog, rain, snow, black ice, oil and more.

Although the automotive industry has yet to find a single silver bullet, numerous companies pitched their new perception technologies and product concepts.

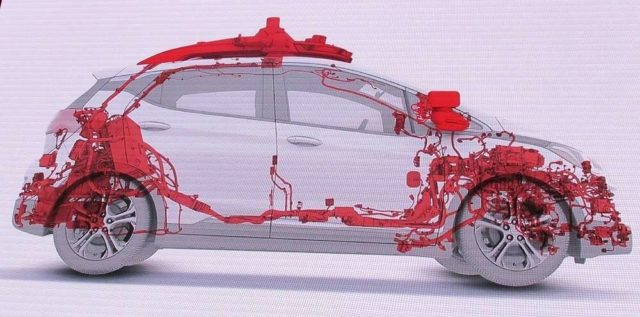

Cruise’s latest test vehicle, which has started to roll off the GM production line, is loaded with sensors, indicated here in red. (Source: Cruise)

At this year’s AutoSens in Brussels, assisted driving (ADAS), rather than autonomous vehicles (AV), was in sharper focus.

Clearly the engineering community is no longer in denial. Many acknowledge that a big gap exists between what’s possible today and the eventual launch of commercial artificial intelligence-driven autonomous vehicles — with no human drivers in the loop.

Just to be clear, nobody is saying self-driving cars are impossible. However, Phil Magney, founder and principal at VSI Labs, predicted that “Level 4 [autonomous vehicles] will be rolled out within a highly restricted operational design domain, [and built on a] very comprehensive and thorough safety case.” By “a highly restricted ODD,” Magney said, “I mean specific road, specific lane, specific operation hours, specific weather conditions, specific times of day, specific pick-up and drop-off points, etc.”

Asked whether an AI-driven car will ever attain “the common sense understanding” of knowing that it is actually driving and understanding its context, Bart Selman, a computer science professor at Cornell University who specializes in AI, said at the conference’s closing panel: “It will be at least 10 years away…, it could be 20 to 30 years away.”

Meanwhile, among those eager to build ADAS and highly automated cars, the name of the game is how best to make vehicles see.

The very foundation for every highly automated vehicle is “perception” (knowing where objects are), noted Phil Koopman, CTO of Edge Case Research and professor at Carnegie Mellon University. Where AVs are weak compared with human drivers is “prediction” (understanding the context and predicting where a perceived object might go next), he explained.

Moving smarts to the edge

Adding more smarts to the edge was a new trend emerging at the conference. Many vendors are adding intelligence at the sensor node by fusing data from different sensors (RGB camera + NIR; RGB + SWIR; RGB + lidar; RGB + radar) right at the edge.

Opinions among industry players, however, appear split on how to accomplish that. Some promote sensor fusion at the edge, while others, such as Waymo, prefer to fuse raw sensor data on a central processing unit.

With Euro NCAP’s mandate for driver monitoring systems (DMS) as a primary safety standard by 2020, a host of new monitoring systems also showed up at AutoSens. These include systems that monitor not only drivers but also passengers and other objects inside a vehicle.

A case in point was the unveiling of On Semiconductor’s new RGB-IR image sensor combined with Ambarella’s advanced RGB-IR video processing SoC and Eyeris’ in-vehicle scene understanding AI software.

NIR vs SWIR

The need to see in the dark, whether inside or outside a vehicle, calls for the use of IR.

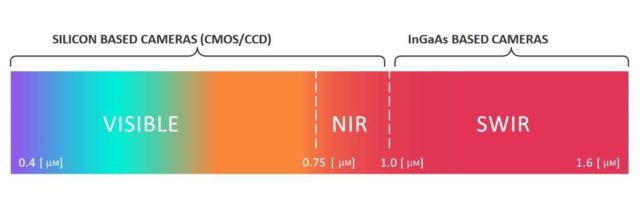

While On Semiconductor’s RGB-IR image sensor uses NIR (near-infrared) technology, Trieye, which also came to the show, went a step further by showing off a SWIR (short-wave infrared) camera.

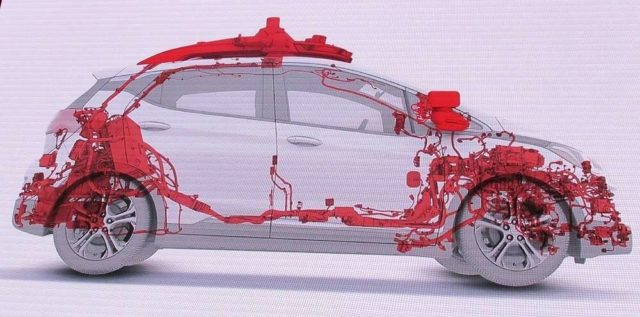

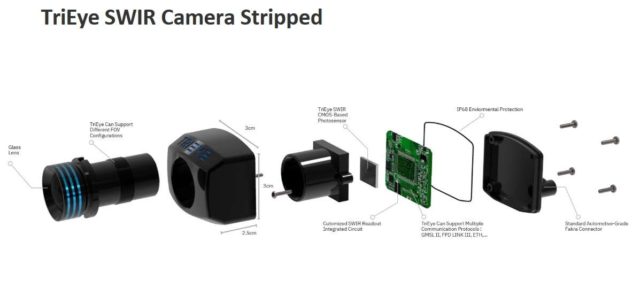

What is short-wave infrared (SWIR)? (Source: Trieye)

SWIR’s claimed benefits include the ability to see objects under any weather or lighting conditions. More important, SWIR can also identify a road hazard such as black ice in advance, because it can detect the unique spectral response defined by each material’s chemical and physical characteristics.

The use of SWIR cameras, however, has been limited to military, science and aerospace applications because of the extremely high cost of the indium gallium arsenide (InGaAs) used to build them. Trieye claims it has found a way to build SWIR sensors using CMOS process technology. “That’s the breakthrough we made. Just like semiconductors, we are using CMOS for the high-volume manufacturing of SWIR cameras from Day 1,” said Avi Bakal, CEO and co-founder of Trieye. Compared with InGaAs sensors that cost more than $8,000, Bakal said, a Trieye camera will be offered “at tens of dollars.”

Lack of annotated data

One of the biggest challenges for AI is the shortage of training data. More specifically, “annotated training data,” said Magney. “The inference model is only as good as the data and the way in which the data is collected. Of course, the training data needs to be labeled with metadata and this is very time consuming.”

AutoSens featured lively discussion of generative adversarial networks (GANs). According to Magney, in a GAN, two neural networks compete to create new data. One network, the generator, produces synthetic samples, while the other, the discriminator, tries to tell them apart from real ones. Given a training set, the technique learns to generate new data with the same statistics as the training set.
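The adversarial game can be shown at toy scale. The sketch below is not any vendor’s method from AutoSens; it is a hypothetical, deliberately tiny GAN in plain Python, assuming one-dimensional “real” data drawn from a Gaussian (mean 1.0, standard deviation 0.5, arbitrary choices), a linear generator G(z) = a·z + b and a logistic-regression discriminator D(x) = sigmoid(w·x + c). The function name `train_toy_gan`, the `slope_penalty` stabilizer and all numeric settings are illustrative assumptions. The two sides take alternating gradient steps: D learns to tell real from generated samples, and G learns to fool D.

```python
import math
import random


def sigmoid(t: float) -> float:
    """Logistic function, written to avoid overflow for large |t|."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.05, batch=64, seed=0,
                  real_mean=1.0, real_std=0.5, slope_penalty=0.1):
    """Alternating gradient training of a 1-D generator vs. discriminator.

    Hypothetical toy setup (not any vendor's method):
      generator      G(z) = a*z + b,          z ~ N(0, 1)
      discriminator  D(x) = sigmoid(w*x + c)  (probability that x is real)
    Returns the learned generator parameters (a, b).
    """
    rng = random.Random(seed)
    a, b = 1.0, -2.0   # generator starts off-target
    w, c = 0.0, 0.0    # discriminator starts undecided (D = 0.5 everywhere)

    for _ in range(steps):
        real = [rng.gauss(real_mean, real_std) for _ in range(batch)]
        z = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * zi + b for zi in z]

        # Discriminator: gradient ascent on mean log D(real) + log(1 - D(fake)).
        gw = gc = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            gw += (1.0 - d) * x
            gc += 1.0 - d
        for x in fake:
            d = sigmoid(w * x + c)
            gw -= d * x
            gc -= d
        # Assumed stabilizer: a small L2 penalty on the slope w damps oscillation.
        w += lr * (gw / batch - slope_penalty * w)
        c += lr * gc / batch

        # Generator: gradient ascent on mean log D(G(z)) ("non-saturating" loss).
        ga = gb = 0.0
        for zi in z:
            d = sigmoid(w * (a * zi + b) + c)
            g = (1.0 - d) * w   # derivative of log D(x) w.r.t. x at x = G(z)
            ga += g * zi        # chain rule: dx/da = z
            gb += g             # chain rule: dx/db = 1
        a += lr * ga / batch
        b += lr * gb / batch

    return a, b


if __name__ == "__main__":
    a, b = train_toy_gan()
    print(f"generator: G(z) = {a:.3f}*z + {b:.3f}")
```

With these settings the generator’s offset b should settle near the real mean of 1.0. Because a linear discriminator can only notice a difference in means, nothing in this toy pushes the spread a toward `real_std`; that limitation is one reason practical GANs, which are also notoriously unstable to train, use deep networks on both sides.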

Drive.ai, for example, is using deep learning to automate more of the annotation work and speed up the tedious process of data labeling.

In a lecture at AutoSens, Koopman also touched on the arduous challenge of annotating data accurately. He suspects that much data remains unlabeled because only a handful of big companies can afford to do it right.

Indeed, AI algorithm startups attending the show acknowledged the pain that comes with paying third parties for annotating data.