The FFT is Fifty

Their 1965 paper starts with the unassuming words “An efficient method for the calculation of the interactions of a 2^m factorial experiment was introduced by Yates and is widely known by his name.” The paper does not use the word “fast” even once. But the algorithm described in that paper by James Cooley and John Tukey[1] came to be known as the Fast Fourier Transform (FFT), and it turned out to be just what the world was waiting for. Overnight, in universities and laboratories around the world, scientists and engineers began writing code and building hardware to implement the FFT. Fifty years later, versions of that algorithm are in routine use, and programmers often tacitly assume that they are available. Any computation that requires (either as an end, or as an intermediate step) the unraveling of the frequency content of information-carrying signals benefits from the enormous speedup offered by the FFT. Other than the speedup, the FFT does not offer any computational result that was not available before its discovery. However, were it not for this amazing algorithm, it would be practically impossible to filter cell-phone signals, compress movie files, do spectroscopy, take magnetic resonance imaging scans, perform quantum computing, or solve differential equations, among other things. The Cooley-Tukey paper created a surge in products that suddenly became possible, and often even became practical to operate in real time.

Although the idea behind the FFT can be explained without using complex math (it involves complex numbers, so I guess that does make the math complex), no attempt to do so will be made here. Suffice it to say that the algorithm belongs to a class of mathematical calculations that can either be performed in a straightforward manner, or be solved by dividing the calculation into smaller steps. Cooley and Tukey showed how the dividing could be done in such a manner that the total effort needed to compute the simpler steps is much less than the effort that would be needed to tackle the computation without such division. There is a wealth of deep mathematics underlying the FFT algorithm. But let us remember that John Tukey, ever the pragmatic engineer, is said to have declared “I wouldn’t want to fly in a plane whose design depended on whether a function was Riemann or Lebesgue integrable.”
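For readers who prefer code to formulas, here is a minimal sketch of the divide-and-conquer idea. It uses the textbook radix-2 form, which assumes the number of samples is a power of two; it is not a transcription of the 1965 paper. The function names `dft_direct` and `fft_radix2` and the sample values are my own, chosen only for illustration.

```python
import cmath


def dft_direct(x):
    """Straightforward transform: every output sums over every input (about N*N steps)."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def fft_radix2(x):
    """Divide-and-conquer transform; the length of x is assumed to be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    if n % 2:
        raise ValueError("length must be a power of two")
    # Split into even- and odd-indexed samples and transform each half separately.
    even = fft_radix2(x[0::2])
    odd = fft_radix2(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        # One multiplication per pair of outputs stitches the two halves together.
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


signal = [1.0, 2.0, 0.0, -1.0, 0.5, 0.0, -2.0, 1.5]  # made-up samples
slow = dft_direct(signal)
fast = fft_radix2(signal)
max_diff = max(abs(a - b) for a, b in zip(slow, fast))
print(max_diff < 1e-9)
```

Both functions produce the same numbers, up to rounding. The only difference is how much work it takes to get them, which is the whole point of the FFT.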

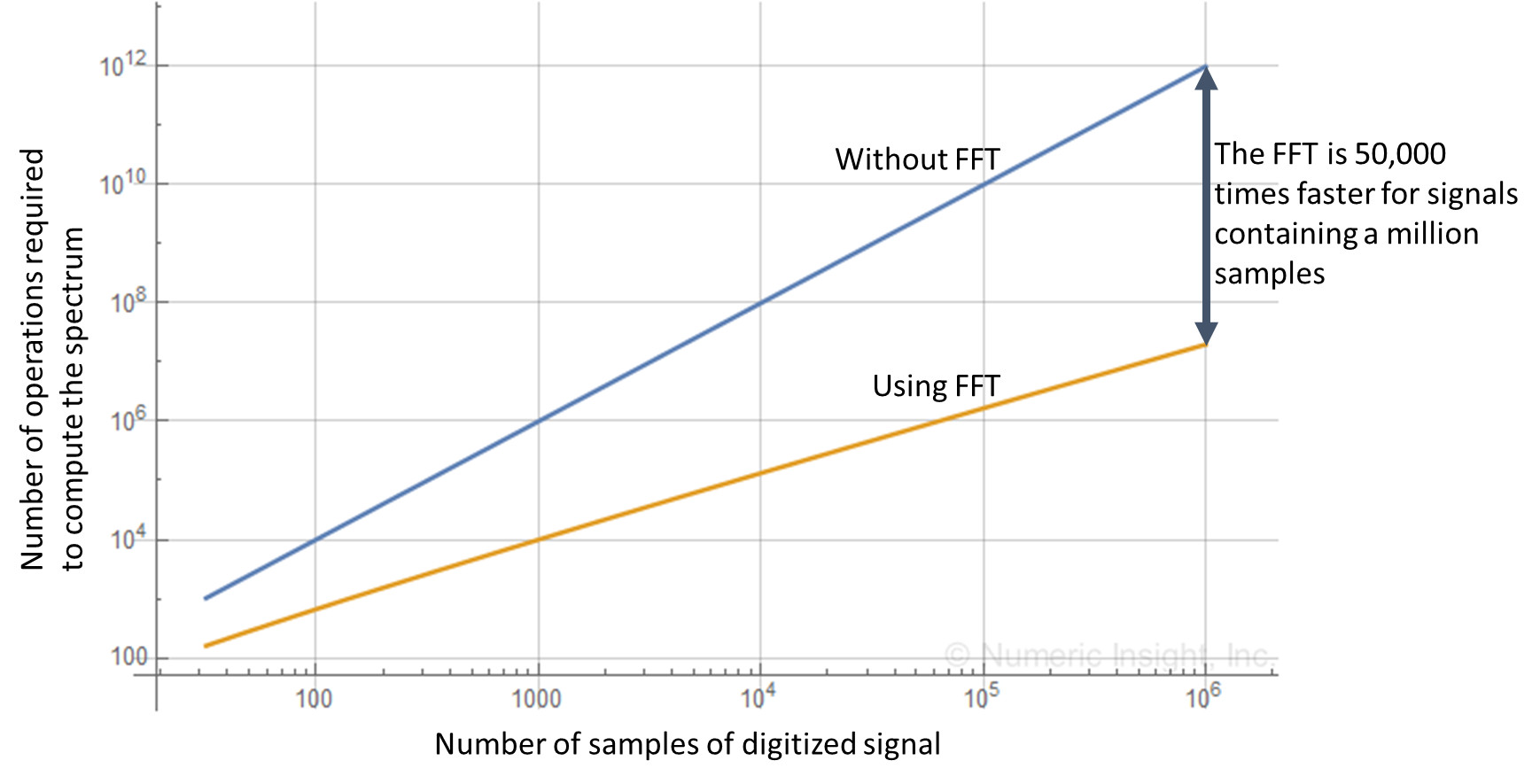

For signals with a million samples, the speedup obtained by virtue of the magic of the FFT can be a factor of upwards of 50,000. To appreciate this ratio, consider some calculation that takes 1 second to complete on your computer. To most of us, this would seem sluggish. In today’s world we expect our devices to be instantly responsive, but we might tolerate such slowness once in a while, say when we are applying some fancy effects to produce an irresistible cat video. Now, imagine that same computation being 50,000 times slower, i.e., taking almost 14 hours to execute.
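The arithmetic behind these figures can be checked in a few lines. This is a rough sketch that assumes the usual operation counts, about N² for the straightforward computation and about N·log2(N) for the FFT, and it ignores constant factors. The choice of N = 2^20 as "a million" is my assumption.

```python
import math

n = 2 ** 20                      # 1,048,576 samples, the power of two closest to a million
direct_ops = n * n               # straightforward computation: roughly N * N
fft_ops = n * math.log2(n)       # FFT: roughly N * log2(N)
speedup = direct_ops / fft_ops   # equals N / log2(N)

# A 1-second job slowed down by a factor of 50,000, expressed in hours.
hours_at_50000 = 50_000 / 3600

print(round(speedup))            # 52429
print(round(hours_at_50000, 1))  # 13.9
```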

For decades, the FFT algorithm and its direct variants have ruled the world of signal processing. It is only recently that researchers[2] at MIT came up with an algorithm which takes advantage of a special property of real-life signals (sparsity) to achieve significantly faster performance. Although the speedup achieved (relative to the FFT, not relative to the straightforward computation) is not as spectacular, it is still useful in many practical applications. For example, it has the potential to reduce the time patients spend inside an MRI machine from an agonizing one hour to a bearable 20 minutes. There are many other applications where increased efficiency of computing the spectrum leads to other benefits such as longer battery life.

My introduction to the FFT came via a classic textbook, Athanasios Papoulis’s Signal Analysis. In graduate school, I remember scribbling page after page of formulas as I worked to absorb the concept. When it all fell into place, the feeling was as exhilarating as though I had come up with the algorithm myself. Wonderful applications suggested themselves, and it seemed that the world could be saved by my newfound understanding of the FFT.

I was to be humbled, because I was soon introduced to an application made possible by the FFT. Transmultiplexers are computational devices that allow a single wire (or radio channel) to simultaneously transmit thousands of speech signals in a way that allows them to be separated by individual receivers. Remarkably, the FFT figures into the calculations needed to pull off this feat. In the common applications I had encountered up to that time, the input to the FFT is a sequence of samples of a single signal as it is generated in time. In the transmultiplexer calculations, by contrast, the input to the FFT at each time step is the sequence formed by gathering samples of several independent speech signals at a given instant of time. It amazed me that you could, in a manner of speaking, meaningfully combine and take apart independent words uttered by a crowd at a given time instant. Now this is one application I could not have come up with.
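To make the "transform across the crowd" idea concrete, here is a stripped-down sketch. It is only the transform round trip: a real transmultiplexer also includes filtering stages that are left out here, and a practical one would use an FFT rather than the plain `dft` helper. The helper, the four channels, and their sample values are all hypothetical.

```python
import cmath


def dft(values, sign):
    """Plain DFT (sign=-1) or unscaled inverse DFT (sign=+1); stands in for an FFT."""
    n = len(values)
    return [
        sum(v * cmath.exp(sign * 2j * cmath.pi * k * t / n) for t, v in enumerate(values))
        for k in range(n)
    ]


# Four hypothetical speech channels, three time steps each (made-up numbers).
channels = [
    [0.1, 0.4, -0.2],
    [0.9, -0.3, 0.0],
    [-0.5, 0.2, 0.7],
    [0.3, 0.3, -0.6],
]
n_channels = len(channels)
n_steps = len(channels[0])

# Transmit side: at each instant, gather one sample from every channel
# and transform across the channels, not across time.
line = []
for step in range(n_steps):
    snapshot = [ch[step] for ch in channels]
    line.append([v / n_channels for v in dft(snapshot, +1)])

# Receive side: the forward transform of each block pulls the channels apart again.
recovered = [[] for _ in range(n_channels)]
for block in line:
    for idx, value in enumerate(dft(block, -1)):
        recovered[idx].append(value.real)

worst = max(
    abs(a - b)
    for ch, rec in zip(channels, recovered)
    for a, b in zip(ch, rec)
)
print(worst < 1e-9)
```

Each block on the shared line mixes all four speakers, yet every speaker's samples come back intact on the other side.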

I know that many engineers and scientists have their own personal story of their first encounter with the FFT. Well, we are nerds, are we not? Can we share?

————

[1] Cooley, James W.; Tukey, John W. (1965). “An algorithm for the machine calculation of complex Fourier series”. Mathematics of Computation, 19: 297–301.

[2] Hassanieh, Haitham; Indyk, Piotr; Katabi, Dina; Price, Eric (2012). “Simple and Practical Algorithm for Sparse Fourier Transform”. ACM-SIAM Symposium on Discrete Algorithms, Kyoto, Japan.

1 Comment

Shashi,

Interesting article!

Every 4G/LTE-enabled cellphone now incorporates an FFT. Professor Gilbert Strang of MIT stated, with regard to the FFT, that “This is the most important numerical algorithm of our lifetime.” (“Wavelets,” American Scientist, Vol. 82, No. 3, 1994, pp. 250-255.)

Jonathan