Self-driving cars will have to decide who should live and who should die

Here’s who humans would kill

By Carolyn Y. Johnson

October 24, 2018

A massive experiment asked users who a self-driving car should save — or not — in various ethical dilemmas. (Moral Machine/MIT)

Imagine this scenario: the brakes fail on a self-driving car as it hurtles toward a busy crosswalk.

A homeless person and a criminal are crossing in front of the car. Two cats are in the opposing lane.

Should the car swerve to mow down the cats or plow into two people?

It’s a relatively straightforward ethical dilemma, as moral quandaries go. And people overwhelmingly prefer to save human lives over animals, according to a massive new ethics study that asked people how a self-driving car should respond when faced with a variety of extreme trade-offs — dilemmas to which more than 2 million people responded. But what if the choice is between two elderly people and a pregnant woman? An athletic person or someone who is obese? Passengers vs. pedestrians?

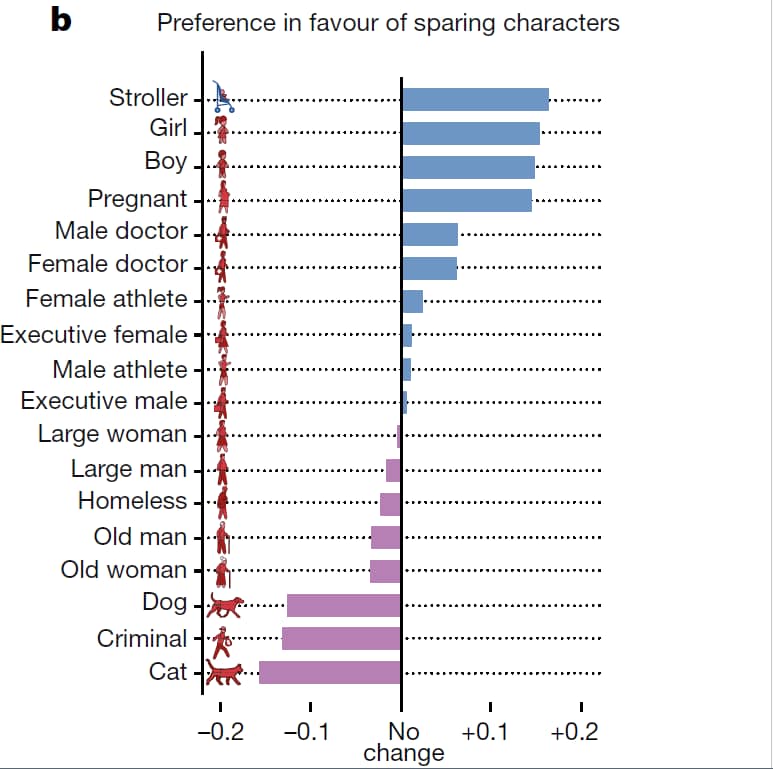

The study, published in Nature, identified a few preferences that were strongest: People opt to save people over pets, to spare the many over the few and to save children and pregnant women over older people. But it also found other preferences for sparing women over men, athletes over obese people and higher-status people, such as executives, over homeless people or criminals. There were also cultural differences in degree: for example, in how strongly people in a cluster of mostly Asian countries preferred to save younger people over the elderly.

A survey posed moral dilemmas to people, asking when autonomous vehicles should spare characters. Researchers found people had a strong preference for sparing children and pregnant women, and little preference for sparing pets and criminals. (Awad, et al in Nature)

“We don’t suggest that [policymakers] should cater to the public’s preferences. They just need to be aware of it, to expect a possible reaction when something happens. If, in an accident, a kid does not get special treatment, there might be some public reaction,” said Edmond Awad, a computer scientist at the Massachusetts Institute of Technology Media Lab who led the work.

The thought experiments posed by the researchers’ Moral Machine website went viral, with its pictorial quiz taken by several million people in 233 countries or territories. The study, which included 40 million responses to different dilemmas, provides a fascinating snapshot of global public opinion as the era of self-driving cars looms large in the imagination. That vision of future convenience, propagated by technology companies, has recently been set back by the death of a woman in Arizona who was hit by a self-driving Uber vehicle.

Awad said one of the major surprises to the research team was how popular the research project became. It got picked up by Reddit, was featured in news stories and influential YouTube users created videos of themselves going through the questions.

The thought-provoking scenarios are fun to debate. They build off a decades-old thought experiment by philosophers called “the trolley problem,” in which an out-of-control trolley hurtles toward a group of five people standing in its path. A bystander has the option to let the trolley crash into them, or to divert it onto a track where a single person stands.

Outside researchers said the results were interesting, but cautioned that they could be overinterpreted. In a randomized survey, researchers try to ensure that a sample is unbiased and representative of the overall population, but in this case the voluntary study was taken by a population that was predominantly younger men. The scenarios are also distilled, extreme and far more black-and-white than the ones abundant in the real world, where probabilities and uncertainty are the norm.

“The big worry that I have is that people reading this are going to think that this study is telling us how to implement a decision process for a self-driving car,” said Benjamin Kuipers, a computer scientist at the University of Michigan, who was not involved in the work.

Kuipers added that these thought experiments may frame some of the decisions that carmakers and programmers make about autonomous vehicle design in a misleading way. There’s a moral choice, he argued, that precedes the conundrum of whether to crash into a barrier and kill three passengers or to run over a pregnant woman pushing a stroller.

“Building these cars, the process is not really about saying, ‘If I’m faced with this dilemma, who am I going to kill.’ It’s saying, ‘If we can imagine a situation where this dilemma could occur, what prior decision should I have made to avoid this?’ ” Kuipers said.

Nicholas Evans, a philosopher at the University of Massachusetts at Lowell, pointed out that while the researchers described their three strongest principles as the ones that were universal, the cutoff between those and the weaker ones that weren’t deemed universal was arbitrary. They categorized the preference to spare young people over elderly people, for example, as a global moral preference, but not the preference to spare those who are following walk signals vs. those who are jaywalking, or to save people of higher social status.

And the study didn’t test scenarios that could have raised even more complicated questions, for example by including the race of the people walking across the road, which might have shown how biased and problematic public opinion is as an arbiter of ethics. Laws and regulations should not necessarily reflect public opinion, ethicists say, but protect vulnerable people against it.

Evans is working on a project that he said has been influenced by the approach taken by the MIT team. He said he plans to use more nuanced crash scenarios, in which real-world transportation data can provide, for example, the probability of surviving a T-bone highway crash on the passenger side, to assess the safety implications of self-driving cars on American roadways.

“We want to create a mathematical model for some of these moral dilemmas and then utilize the best moral theories that philosophy has to offer, to show what the result of choosing an autonomous vehicle to behave in a certain way is,” Evans said.

Iyad Rahwan, a computer scientist at MIT who oversaw the work, said that a public poll shouldn’t be the foundation of artificial intelligence ethics. But he said that regulating AI will be different from traditional products, because the machines will have autonomy and the ability to adapt — which makes it more important to understand how people perceive AI and what they expect of technology.

“We should take public opinion with a grain of salt,” Rahwan said. “I think it’s informative.”

This article was originally published at Washingtonpost.com on October 24, 2018.